
Adding AI assistants to existing legacy applications is gaining momentum. An AI assistant such as a chatbot can give users a unified experience and let them perform tasks across multiple modules through a single interface.

In this article, we'll see how to leverage Spring AI to build an AI assistant. We'll demonstrate how to reuse existing application services and functions alongside LLM capabilities.

Function Calling Concept

An LLM can respond to an application request in multiple ways:

- The LLM responds from its training data

- The LLM uses the information provided in the prompt to respond to the query

- The LLM finds callback function information in the prompt and asks the application to call that function to help build the response

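Let's try to understand the third option, which Spring AI supports through its Function Calling API. The application sends the prompt together with metadata describing the available functions. When the LLM decides it needs one of them, it replies with the function name and its arguments. The application then runs the function and sends the result back, and the LLM uses that result to compose the final response.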

Next, we'll explore Spring AI's support for function calling in detail.
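The round trip behind function calling can be sketched in plain Java, without any framework. This is only an illustration under stated assumptions: the class FunctionCallingSketch, its Callback and FunctionCall records, and the hard-coded "model decision" are hypothetical and are not part of Spring AI.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.Function;

// Hypothetical, framework-free illustration; none of these names come from Spring AI.
final class FunctionCallingSketch {

    // A callback the application exposes: a description for the model plus the code to run.
    record Callback(String description, Function<String, String> body) {}

    // What the model sends back when it decides that a function is needed.
    record FunctionCall(String name, String argument) {}

    private final Map<String, Callback> registry = new LinkedHashMap<>();

    void register(String name, String description, Function<String, String> body) {
        registry.put(name, new Callback(description, body));
    }

    // Step 1: the metadata that would travel to the model along with the user prompt.
    String describeFunctions() {
        StringBuilder sb = new StringBuilder();
        registry.forEach((name, cb) -> sb.append(name).append(": ").append(cb.description()).append('\n'));
        return sb.toString();
    }

    // Step 2: the application executes the call the model asked for. The result would
    // then be sent back so that the model can compose the final answer.
    String execute(FunctionCall call) {
        Callback callback = registry.get(call.name());
        if (callback == null) {
            throw new IllegalArgumentException("Unknown function: " + call.name());
        }
        return callback.body().apply(call.argument());
    }

    public static void main(String[] args) {
        FunctionCallingSketch app = new FunctionCallingSketch();
        app.register("searchBooksByAuthorFn", "Fetch all books written by an author",
            author -> "Books by " + author);

        System.out.println(app.describeFunctions());
        // Pretend the model answered with this function call request.
        System.out.println(app.execute(new FunctionCall("searchBooksByAuthorFn", "Mark Twain")));
    }
}
```

The key point is that the model never runs code itself. It only names a function and supplies arguments, and the application stays in control of the execution.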

Demo Application Design and Implementation

This section will focus on designing and implementing an AI assistance feature for a legacy application, leveraging the Function Calling API.

Use Case

Let's consider an application that helps maintain a catalog of books. The application enables the users to:

- Save catalogs of books

- Search books by title, author, publication, etc.

Prerequisites

For the sample application, we'll use the popular in-memory HSQLDB database. So, let's begin by defining the data model for the author, publication, and book tables:

    -- Create the author table
    CREATE TABLE author (
        author_id INTEGER
            GENERATED BY DEFAULT AS IDENTITY
            (START WITH 1, INCREMENT BY 1) PRIMARY KEY,
        name VARCHAR(255) NOT NULL
    );

    -- Create the publication table
    CREATE TABLE publication (
        publication_id INTEGER
            GENERATED BY DEFAULT AS IDENTITY
            (START WITH 1, INCREMENT BY 1) PRIMARY KEY,
        name VARCHAR(255) NOT NULL
    );

    -- Create the book table
    CREATE TABLE book (
        book_id INTEGER
            GENERATED BY DEFAULT AS IDENTITY
            (START WITH 1, INCREMENT BY 1) PRIMARY KEY,
        name VARCHAR(255) NOT NULL,
        author_id INTEGER NOT NULL,
        genre VARCHAR(100),
        publication_id INTEGER NOT NULL,
        CONSTRAINT fk_author FOREIGN KEY (author_id)
            REFERENCES author(author_id) ON DELETE CASCADE,
        CONSTRAINT fk_publication
            FOREIGN KEY (publication_id)
            REFERENCES publication(publication_id) ON DELETE CASCADE
    );

The book table refers to the author and publication tables for author_id and publication_id columns.

Also, let's insert some data into the tables:

    -- Insert data into the author table
    INSERT INTO author (name) VALUES ('J.K. Rowling');
    INSERT INTO author (name) VALUES ('George R.R. Martin');
    --and so on..

    -- Insert data into the publication table
    INSERT INTO publication (name) VALUES ('Bloomsbury');
    INSERT INTO publication (name) VALUES ('Bantam Books');
    --and so on..

    -- Insert data into the book table
    INSERT INTO book (name, author_id, genre, publication_id)
    VALUES ('Harry Potter and the Philosopher''s Stone', 1, 'Fantasy', 1);
    INSERT INTO book (name, author_id, genre, publication_id)
    VALUES ('Harry Potter and the Chamber of Secrets', 1, 'Fantasy', 1);
    --and so on..

Spring AI supports function calling for multiple AI models, including Anthropic Claude, Groq, OpenAI, Mistral AI, etc. However, in this article, we'll use OpenAI's LLM service, which requires a subscription to invoke its API.

Further, the best way to get the Spring dependencies into the application is by using the Spring Initializr web page. Still, let's call out the important libraries:

    <dependency>
        <groupId>org.springframework.ai</groupId>
        <artifactId>spring-ai-starter-model-openai</artifactId>
        <version>1.0.0-M8</version>
    </dependency>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-data-jpa</artifactId>
        <version>3.3.2</version>
    </dependency>
    <dependency>
        <groupId>org.hsqldb</groupId>
        <artifactId>hsqldb</artifactId>
        <version>2.7.3</version>
    </dependency>

We've included the Spring OpenAI starter, Spring Data JPA starter, and HSQLDB client libraries. More details are available in our repository.

In the next section, we'll define a few Spring components for implementing the demo application's functionalities.

Basic Spring Components Without AI

First, let's take a look at the basic components used in the demo application without the AI support:

We've defined three entity bean classes: Book, Publication, and Author. These are in line with the database tables defined in the previous section. Furthermore, there are three Spring repository classes for the entities.
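The entity classes themselves aren't listed in this article, so the following is only a minimal plain-Java sketch of how they relate. The records below are hypothetical stand-ins: the real classes would be JPA entities with annotations such as @Entity and @ManyToOne, and the search would be a repository query method rather than a stream filter.

```java
import java.util.List;

// Hypothetical plain-Java stand-ins for the JPA entities; annotations are omitted on purpose.
final class CatalogModelSketch {

    record Author(int authorId, String name) {}

    record Publication(int publicationId, String name) {}

    // A book points at exactly one author and one publication, like the two foreign keys.
    record Book(int bookId, String name, String genre, Author author, Publication publication) {}

    // In-memory equivalent of a repository query such as findByAuthorNameIgnoreCase.
    static List<Book> findByAuthorNameIgnoreCase(List<Book> books, String authorName) {
        return books.stream()
            .filter(book -> book.author().name().equalsIgnoreCase(authorName))
            .toList();
    }

    public static void main(String[] args) {
        Author rowling = new Author(1, "J.K. Rowling");
        Publication bloomsbury = new Publication(1, "Bloomsbury");
        List<Book> books = List.of(
            new Book(1, "Harry Potter and the Philosopher's Stone", "Fantasy", rowling, bloomsbury),
            new Book(2, "Harry Potter and the Chamber of Secrets", "Fantasy", rowling, bloomsbury));

        // The lookup ignores case, so a lower-case author name still matches.
        System.out.println(findByAuthorNameIgnoreCase(books, "j.k. rowling").size());
    }
}
```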

The BookManagementService class talks to the database through the three repository classes:

    @Service
    public class BookManagementService {

        @Autowired
        private BookRepository bookRepository;

        @Autowired
        private AuthorRepository authorRepository;

        @Autowired
        private PublicationRepository publicationRepository;

        public Integer saveBook(Book book) {
            return bookRepository.save(book).getBookId();
        }

        public Integer saveBook(Book book, Publication publication, Author author) {
            book.setPublication(publication);
            book.setAuthor(author);
            return bookRepository.save(book).getBookId();
        }

        public List<Book> searchBooksByAuthor(String authorName) {
            List<Book> books = bookRepository.findByAuthorNameIgnoreCase(authorName)
                .orElse(new ArrayList<Book>());
            return books;
        }

        public Author getAuthorDetailsByName(String name) {
            return authorRepository.findByName(name).orElse(new Author());
        }

        public Publication getPublicationDetailsByName(String name) {
            return publicationRepository.findByName(name)
                .orElse(new Publication());
        }
    }

Basic Spring Components With AI

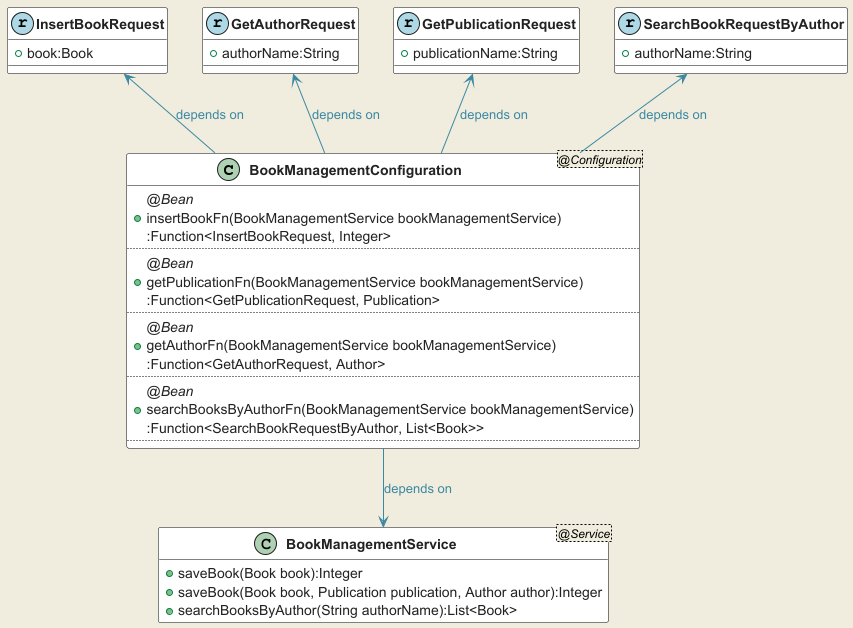

Fortunately, we don't have to change anything on the existing components. Rather, to develop the AI support on the application, we'll add a few additional components:

So, to enable AI in the application, we've introduced the Spring configuration class that exposes a few function beans. These function beans reuse the methods of the BookManagementService class.

Let's see what the configuration class looks like:

    @Configuration
    public class BookManagementConfiguration {

        @Bean
        @Description("insert a book. The book id is identified by bookId. "
            + "The book name is identified by name. "
            + "The genre is identified by genre. "
            + "The author is identified by author. "
            + "The publication is identified by publication. "
            + "The user must provide the book name, author name, genre and publication name.")
        Function<InsertBookRequest, Integer> insertBookFn(BookManagementService bookManagementService) {
            return insertBookRequest -> bookManagementService.saveBook(insertBookRequest.book(),
                insertBookRequest.publication(), insertBookRequest.author());
        }

        @Bean
        @Description("get the publication details. "
            + "The publication name is identified by publicationName.")
        Function<GetPublicationRequest, Publication> getPublicationFn(BookManagementService bookManagementService) {
            return getPublicationRequest ->
                bookManagementService.getPublicationDetailsByName(getPublicationRequest.publicationName());
        }

        @Bean
        @Description("get the author details. "
            + "The author name is identified by authorName.")
        Function<GetAuthorRequest, Author> getAuthorFn(BookManagementService bookManagementService) {
            return getAuthorRequest ->
                bookManagementService.getAuthorDetailsByName(getAuthorRequest.authorName());
        }

        // The trailing spaces keep the concatenated sentences from running together
        @Bean
        @Description("Fetch all books written by an author by his name. "
            + "The author name is identified by authorName. "
            + "If you don't find any books for the author just respond "
            + "- Sorry, I could not find anything related to the author")
        Function<SearchBookRequestByAuthor, List<Book>> searchBooksByAuthorFn(BookManagementService bookManagementService) {
            return searchBookRequestByAuthor ->
                bookManagementService.searchBooksByAuthor(searchBookRequestByAuthor.authorName());
        }
    }

The @Description annotation is like a guidebook for the LLM. It describes the mapping between the user entries and the request object. With this information, Spring AI forms the callback function metadata. Later, using this metadata, the LLM chooses the correct function and builds its JSON request arguments.
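To make the argument handling more concrete, here is a rough sketch of how named arguments chosen by the LLM could be bound to a request record. Spring AI performs the real JSON conversion internally, so the bind helper and the RecordBindingSketch class below are hypothetical and only imitate the idea with reflection over a plain map.

```java
import java.lang.reflect.Constructor;
import java.lang.reflect.RecordComponent;
import java.util.Map;

// Hypothetical helper for illustration only; it is not how Spring AI is implemented.
final class RecordBindingSketch {

    // Same shape as the request record used by searchBooksByAuthorFn.
    record SearchBookRequestByAuthor(String authorName) {}

    // Creates a record instance by matching argument names to record component names.
    static <T extends Record> T bind(Map<String, Object> arguments, Class<T> type) {
        RecordComponent[] components = type.getRecordComponents();
        Class<?>[] parameterTypes = new Class<?>[components.length];
        Object[] values = new Object[components.length];
        for (int i = 0; i < components.length; i++) {
            parameterTypes[i] = components[i].getType();
            values[i] = arguments.get(components[i].getName());
        }
        try {
            // The canonical constructor takes the components in declaration order.
            Constructor<T> canonical = type.getDeclaredConstructor(parameterTypes);
            canonical.setAccessible(true);
            return canonical.newInstance(values);
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("Cannot bind arguments to " + type.getSimpleName(), e);
        }
    }

    public static void main(String[] args) {
        // Stand-in for the parsed JSON arguments {"authorName": "Mark Twain"}.
        Map<String, Object> arguments = Map.of("authorName", "Mark Twain");
        SearchBookRequestByAuthor request = bind(arguments, SearchBookRequestByAuthor.class);
        System.out.println(request.authorName());
    }
}
```

This is why the names used in the @Description text should match the record component names: the LLM builds its arguments from that description, and the binding relies on the names lining up.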

Spring AI then converts the JSON arguments into the following Java records:

    record SearchBookRequestByAuthor(String authorName) {}

    record InsertBookRequest(Book book, Publication publication, Author author) {}

    record GetAuthorRequest(String authorName) {}

    record GetPublicationRequest(String publicationName) {}

Function Callback in Action

Now that we have discussed the foundation classes, we can see the Function Calling API in action.

Implementation

Once the Spring OpenAI starter is on the classpath and its API key is configured, we can autowire the ChatModel bean into Spring service classes. We'll then use this bean to register the callback functions:

    public void whenUserGivesInstructions_thenRespond(String userQuery) {
        // Explicit spaces at the string boundaries keep the sentences from running together
        String systemInstruction = "While answering, please stick to the"
            + " context provided by the function."
            + " If the book with the same title and"
            + " author already exists, please abort the insert and inform the same.";

        // Register the function beans by name. Spring AI 1.0.0-M8 names this builder
        // method toolNames(); earlier milestones called it withFunctions()
        OpenAiChatOptions openAiChatOptions = OpenAiChatOptions.builder()
            .toolNames(Set.of("searchBooksByAuthorFn", "insertBookFn", "getPublicationFn", "getAuthorFn"))
            .build();

        Prompt prompt = new Prompt(userQuery + " " + systemInstruction, openAiChatOptions);
        ChatResponse chatResponse = chatModel.call(prompt);

        // getText() replaces the getContent() accessor of earlier milestones
        String response = chatResponse.getResult()
            .getOutput()
            .getText();
        logger.info("Response from OpenAI LLM: {}", response);
    }

The method constructs a Prompt object, combining the user query with a special system instruction. The system instruction restricts the LLM's response to the application's context. Additionally, it prevents duplicate book entries in the application.

The method also builds an OpenAiChatOptions object to register the function beans declared in the Spring configuration class. Finally, the chatModel object calls the OpenAI API to generate the response.

Search Books By Authors

Moving on, let's look at the responses received from the LLM on different user queries:

User Query:

    I would like to know at most two books by the authors
    Mark Twain, Stephen King, and Leo Tolstoy.

LLM Response:

    Here are two books from each of the requested authors:

    **Mark Twain:**
    1. The Adventures of Tom Sawyer (Genre: Adventure)
    2. Adventures of Huckleberry Finn (Genre: Adventure)

    **Stephen King:**
    1. The Shining (Genre: Horror)
    2. Carrie (Genre: Horror)

    **Leo Tolstoy:**
    Sorry, I could not find anything related to the author.

Upon receiving the request, the LLM instructed the application to execute searchBooksByAuthorFn. Finally, the application successfully responded to the user query.

Let's highlight some of the notable outcomes:

- Interestingly, the LLM responded with the details of only two books per author, though the application has more books from these authors. The LLM took the context from the function's output and trimmed it to match the user's request for at most two books.

- The LLM requested the application to execute the same function multiple times with different author names.

- The LLM could not provide any books by Leo Tolstoy because the underlying function didn't return any information for that author. Besides, the LLM was explicitly instructed to limit its responses to the provided context.
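The outcomes above can be imitated in plain Java to show what the LLM effectively orchestrated. This is a hypothetical sketch, not the application's code: the MultiAuthorSearchSketch class and its answer method are made up for illustration, and the search function is passed in as a parameter.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

// Hypothetical sketch of the orchestration the LLM performed across several function calls.
final class MultiAuthorSearchSketch {

    static final String NOT_FOUND = "Sorry, I could not find anything related to the author";

    // Calls the same search function once per author and keeps at most `limit` titles each.
    static Map<String, List<String>> answer(List<String> authors, int limit,
            Function<String, List<String>> searchBooksByAuthor) {
        Map<String, List<String>> answer = new LinkedHashMap<>();
        for (String author : authors) {
            List<String> titles = searchBooksByAuthor.apply(author);
            answer.put(author, titles.isEmpty()
                ? List.of(NOT_FOUND)
                : titles.stream().limit(limit).toList());
        }
        return answer;
    }

    public static void main(String[] args) {
        // Made-up catalog content standing in for the database.
        Map<String, List<String>> catalog = Map.of(
            "Stephen King", List.of("The Shining", "Carrie", "It"));

        System.out.println(answer(List.of("Stephen King", "Leo Tolstoy"), 2,
            author -> catalog.getOrDefault(author, List.of())));
    }
}
```

In the real application none of this logic is written by hand. The LLM derives it from the user query, the function descriptions, and the system instruction.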

Insert Books

Let's see what happens when the user provides the instruction to insert a book into the application.

User Query:

    Insert the book Sense and Sensibility by the author Jane Austen,
    published by Penguin Books in the genre Romance.

LLM Response:

    The book "Sense and Sensibility" by Jane Austen has been successfully inserted,
    published by Penguin Books in the genre Romance.

That was pretty straightforward. Let's see how the LLM handles a request to insert a book that already exists.

User Query:

    Insert the book ABC Murders by the author Agatha Christie,
    published by Penguin Books in the genre Romance.

LLM Response:

    The book "The ABC Murders" by Agatha Christie already exists. Aborting the insert.

The LLM first calls the searchBooksByAuthorFn function to check whether the book already exists. Because it finds a matching entry, it aborts the insert instead of calling the insertBookFn function. Had no duplicate existed, it would have gone on to insert the record. This demonstrates that the LLM can make decisions by chaining multiple functions.
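The search-then-insert chain can also be written down as a deterministic check. The sketch below is an assumption-laden illustration, not part of the demo application: the DuplicateGuardSketch class, its Book record, and the insertIfAbsent method are hypothetical names, and the catalog is an in-memory list.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical in-memory catalog that mirrors the search-then-insert chain.
final class DuplicateGuardSketch {

    record Book(String title, String author) {}

    private final List<Book> books = new ArrayList<>();

    List<Book> searchBooksByAuthor(String author) {
        return books.stream()
            .filter(book -> book.author().equalsIgnoreCase(author))
            .toList();
    }

    // Returns true when the book was inserted, false when the same title and author already exist.
    boolean insertIfAbsent(Book candidate) {
        boolean exists = searchBooksByAuthor(candidate.author()).stream()
            .anyMatch(book -> book.title().equalsIgnoreCase(candidate.title()));
        if (exists) {
            return false;
        }
        books.add(candidate);
        return true;
    }

    public static void main(String[] args) {
        DuplicateGuardSketch catalog = new DuplicateGuardSketch();
        System.out.println(catalog.insertIfAbsent(new Book("The ABC Murders", "Agatha Christie")));
        System.out.println(catalog.insertIfAbsent(new Book("The ABC Murders", "Agatha Christie")));
    }
}
```

Note that a strict comparison like this one would treat "ABC Murders" and "The ABC Murders" as different titles, whereas the LLM matched them loosely. Whether that flexibility is desirable depends on the application, and a guard in the service layer may still be worth keeping alongside the prompt instruction.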

Conclusion

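In this article, we saw how Spring AI's Function Calling API lets an AI assistant reuse the services of an existing application. However, complex applications would also need Spring AI's support for the RAG technique, the Structured Output API, and the Advisors API, which can further augment function calling. Unfortunately, these additions also increase the implementation cost.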

Finally, a well-structured and well-written prompt is equally important and would help the application immensely.

Visit our GitHub repository to access the article's source code.
